We spend the majority of our time in front of screens, mostly a computer, tablet, phone, or TV[1]. These are largely devices the user owns or controls. I’m surprised we don’t yet have more interactions with screens out in the world.

Face detection and object recognition technologies are now highly accessible, making it easy to use a camera to make a display interactive. In this post I’ll describe my starting place on a small project using this technology to create an animation designed to unnerve the user.

Things following you

Try to recall that creepy sensation you get when someone or something is looking at you. Now imagine having that all of the time. That is the unsettled feeling I want to evoke. A few ideas:

- A poster for a new Lord of the Rings movie with an Eye of Sauron that follows you as you walk into the cinema[2].

- Someone at the grocery store (during COVID-19) whose shirt beeps and flashes red if you get within 6 feet of them.

I intentionally made these examples somewhat dystopian. There is an important societal reckoning taking place right now regarding tracking technologies (particularly in regard to their impacts on communities of color). I wanted to work on something that, while playful, would call to mind concerns of a ‘Big Brother’ or ‘watchful eye’-like figure.

Codepen example

As a starting place, I focused on animating an eye that would track a user who looks at it[3].

Here is my first draft:

If you want to set it up:

- Open a Chrome browser and enable experimental web platform features at `chrome://flags/#enable-experimental-web-platform-features` (this currently only works in Chrome and does not yet work on Android, iOS, or Linux)

- Go to my CodePen[4]

- Allow use of webcam when prompted

- For a better view, ensure you are on the ‘Results’ tab and press the F11 key to hide the browser bar

- You will likely need to refresh the page after opening it or resizing the window
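The Chrome-only requirement comes from the experimental `FaceDetector` interface of the Shape Detection API. As a rough sketch (not the actual CodePen code), a page could check that the interface exists before asking for the webcam, and then reduce a detected face to a single point. The helper names `supportsFaceDetection` and `faceCenter` are made up for illustration.

```javascript
// Hypothetical helper: true when the experimental FaceDetector constructor
// is exposed on the given global object (window in a browser).
function supportsFaceDetection(scope = globalThis) {
  return typeof scope.FaceDetector === 'function';
}

// Hypothetical helper: center point of a detected face's bounding box.
// `face.boundingBox` holds x, y, width and height in pixels of the source image.
function faceCenter(face) {
  const { x, y, width, height } = face.boundingBox;
  return { x: x + width / 2, y: y + height / 2 };
}

// Browser-only usage, assuming `video` is a playing <video> element
// fed by the webcam:
//   if (supportsFaceDetection()) {
//     const detector = new FaceDetector({ maxDetectedFaces: 1 });
//     const faces = await detector.detect(video);
//     if (faces.length > 0) console.log(faceCenter(faces[0]));
//   }
```

Passing the global object in as a parameter keeps the check easy to try outside a browser, where `FaceDetector` is never defined.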

If you want to use it to creep out the family members you are locked at home with, see the Additional actions section.

How I made it

To get the video and initial face detection set up, I copied code from this GitHub repo by Wes Bos. To animate the eye I used an HTML5 canvas element and JavaScript. The eye simply follows your position in the video, though I did a few things to make the eye movement look more interesting:

- Rather than updating with every frame, it estimates your position from a moving average of 10 frames. This makes the movement smoother and softens the jitter from the algorithm constantly updating its estimate of your position.

- I used some trigonometry to soften the tracking so that the pupil’s movement would look more realistic at a distance.

- I also have the components of the eye slightly change shape and turn in or out depending on your position.

However, this is still very much a work in progress[5]; fixing the eye tracking is the major focus area for Next steps.
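The first two ideas in the list above can be sketched as follows. This is a minimal illustration rather than the CodePen code: the class name `MovingAverage`, the function `softenOffset`, and the choice of an arctangent curve for the softening are all assumptions, since the post does not spell out the exact trigonometry used.

```javascript
// Keeps the most recent `size` positions and reports their mean,
// so a single noisy detection only nudges the eye slightly.
class MovingAverage {
  constructor(size = 10) {
    this.size = size;
    this.samples = [];
  }

  // Adds a new {x, y} sample and returns the current average.
  push(point) {
    this.samples.push(point);
    if (this.samples.length > this.size) this.samples.shift();
    const n = this.samples.length;
    const sum = this.samples.reduce(
      (acc, p) => ({ x: acc.x + p.x, y: acc.y + p.y }),
      { x: 0, y: 0 }
    );
    return { x: sum.x / n, y: sum.y / n };
  }
}

// Assumed softening curve: maps the face's offset from the frame center
// to a pupil displacement that levels off near `maxTravel` instead of
// growing linearly with the offset.
function softenOffset(offsetPx, halfFrameWidthPx, maxTravel) {
  const normalized = offsetPx / halfFrameWidthPx;
  return maxTravel * (2 / Math.PI) * Math.atan(normalized);
}
```

In a render loop, each detected face center would go through `push`, and the averaged position would then go through `softenOffset` (once per axis) before the pupil is drawn.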

Next steps

Improve position mapping:

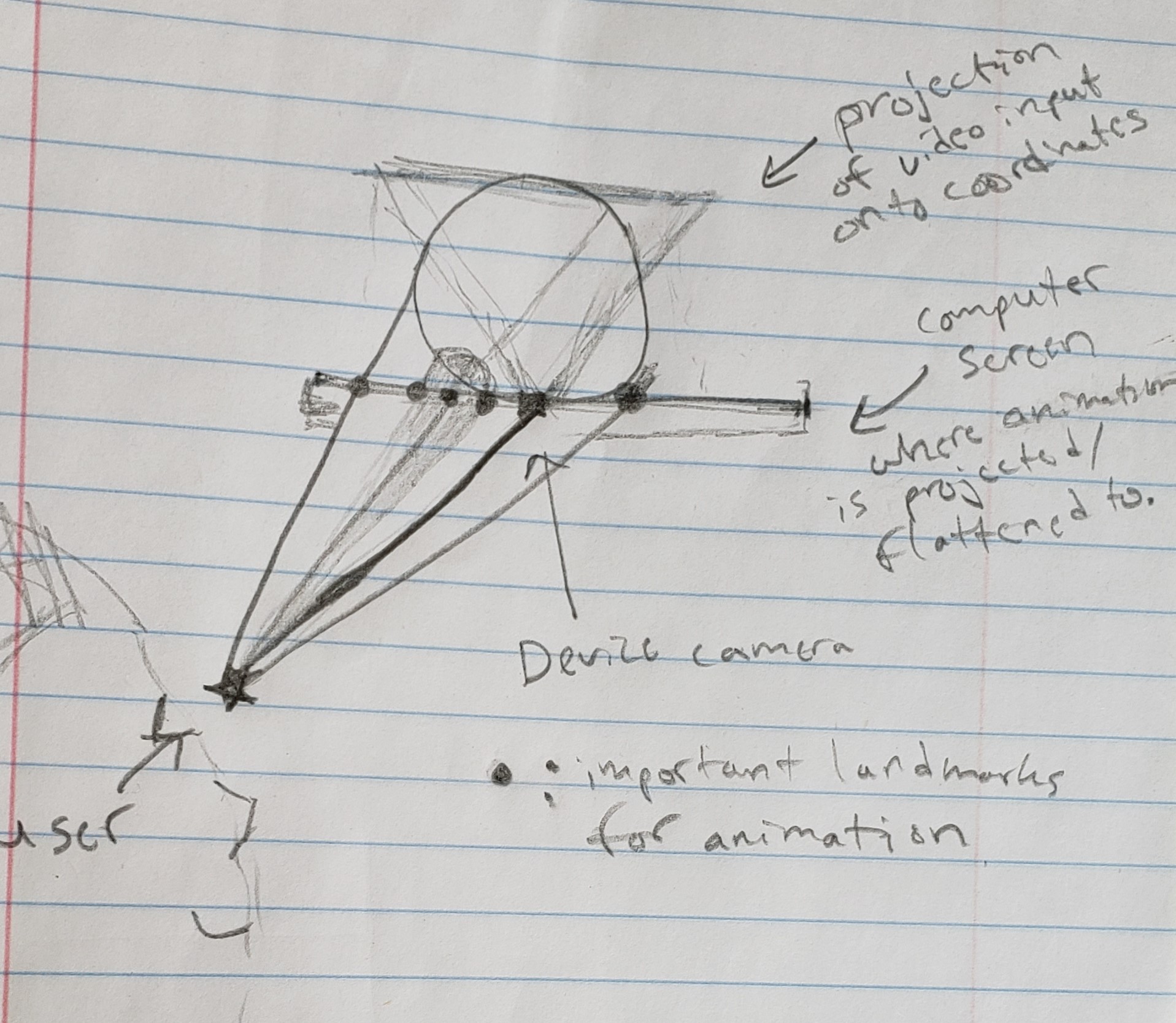

Using the measured size of facial landmarks, you can estimate how far someone is from the screen (see the relevant project on GitHub[6]). Once you have an estimate of someone’s position, you can more accurately adjust the animation so that the eye appears to follow the user through space[7]. Here is a ‘back of the napkin’ sketch of my mental model for the problem:

Diagram of key location points for animating eyeball with reference to a user.
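One common way to turn a face size in pixels into a distance is the pinhole camera model, where distance is roughly the focal length (in pixels) times the real size divided by the size in the image. The sketch below assumes that model; the function names, the 15 cm face width, and the focal length value are placeholders, and a real setup would need to calibrate the focal length for its own camera.

```javascript
// Pinhole-model estimate of how far a face is from the camera.
// sizePx:        measured face width (or height) in the video frame, in pixels
// focalLengthPx: camera focal length in pixels (assumed known from calibration)
// realSizeCm:    assumed real-world size of the same feature, in centimeters
function estimateDistanceCm(sizePx, focalLengthPx, realSizeCm = 15) {
  return (focalLengthPx * realSizeCm) / sizePx;
}

// Converts a horizontal (or vertical) pixel position into a real-world
// offset from the camera axis at the estimated distance.
function lateralOffsetCm(pixel, centerPixel, distanceCm, focalLengthPx) {
  return ((pixel - centerPixel) * distanceCm) / focalLengthPx;
}
```

For example, with an assumed focal length of 600 px, a face that is 150 px wide would be estimated at about 60 cm from the camera.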

Once you have an estimate of the distance a face is from the camera, the important points for the projection of the eye to a 2D animation can be filled in (with just a little bit of trigonometry). Ultimately I’d love to do something like what can be found at this GitHub repo, but tailored to where the user is standing. However this may be limited[8]; also, the view would be tailored to a single user[9].
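As one possible way to fill in those points (an assumption, not the model in the sketch above), the eyeball can be treated as a sphere centered on the screen, with the pupil placed where the line from the sphere’s center to the viewer crosses its surface. Dropping the depth coordinate then gives the 2D position to draw. The function name `pupilOnScreen` and the coordinate convention are hypothetical.

```javascript
// viewer:    {x, y, z} position of the viewer relative to the eyeball center,
//            with x to the right, y up, and z pointing out of the screen
// eyeRadius: radius of the eyeball in the same units as the drawing
// Returns the 2D offset of the pupil center from the eyeball center.
function pupilOnScreen(viewer, eyeRadius) {
  const length = Math.hypot(viewer.x, viewer.y, viewer.z);
  if (length === 0) return { x: 0, y: 0 };
  // Scale the direction to the viewer onto the sphere surface,
  // then keep only the on-screen (x, y) components.
  return {
    x: (eyeRadius * viewer.x) / length,
    y: (eyeRadius * viewer.y) / length,
  };
}
```

A viewer straight in front of the screen gives a centered pupil, and the pupil approaches the rim of the eye only as the viewer moves far off to the side.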

Improve everything else:

The above improvements would require a great deal more sophistication in the animation. I’d also like to improve the code quality. All of these Next steps are largely aspirational: this project is far removed from my day job and I am inexperienced with many of the underlying technologies and software. Hence I’m unsure when I’ll pick this back up[10].

Learning path and resources

My initial plan (for building the eye tracking component) was to use the Python bindings for OpenCV[11] for the face detection. I would then use the open source 3D creation software Blender (which can also run Python scripts) to overlay an animation[12]. See this example where someone uses a webcam and Blender to demo their face animations on a character. A problem with this approach is that Blender is not a lightweight application, so I wasn’t sure how I would easily deploy it, and I investigated alternative approaches.

I learned about Google Chrome’s experimental Shape Detection API near the end of this presentation by Cassie Evans on making interactive SVG images. I then found Wes Bos’s tweet on the subject.

😮 Did you know Chrome has a FaceDetector API? pic.twitter.com/wSwDdI8p1u

— Wes Bos (@wesbos) March 20, 2018

I decided to go this route because of the relative simplicity of the shape detection API and the ease with which I could then deploy a first draft through a Chrome browser. A problem was that I needed to learn some web development (or at least JavaScript) basics.

Preliminary learning resources:

- About 40% of the videos/exercises from the first three courses of University of Michigan’s Web Design series on Coursera by Colleen van Lent

- The first few chapters of Learn to Code HTML & CSS by Shay Howe

- Most of the tutorials on HTML5 canvas elements by Chris Courses

Rather than using SVGs, I ended up just using a canvas element and JavaScript[13].

Closing thoughts

Try it out or consider ways you can make something engaging or surprising for users. If you do, please let me know at brshallo on Twitter 😄.

Appendix

Associated Twitter post:

New addition to the livingroom, giant eyeball that follows you around when you look at it.

— Bryan Shalloway (@brshallo) July 21, 2020

See blog on how I made it using @chrome browser's #FaceDetection api and #JavaScript : https://t.co/S993yWZEpn pic.twitter.com/1ebjaGmPzC

Additional actions

- Ensure there is good lighting; tracking tends to get jumpy at a distance (honestly, it only works so-so at this point)[14]

- Plug device into a TV or larger display

- Get the camera lined up (ideally close to eye level)

- Call your loved one into the room and wait for them to notice and start interacting with the giant eyeball that is following them

- For bonus points capture it on video and tweet it at me or with an appropriate hashtag (e.g. #eyeseeyou)

- For bonus bonus points, edit (or improve) the code and make some fun new animation

[1] Maybe also Peloton, car display, watch, Mirror… (if you’re fancy).

[2] Similarly, picture a portrait whose eyes follow you as you walk by, similar to Mark Rober’s [video](https://www.youtube.com/watch?v=sPgKu2E-jdw), but tracking you automatically.

[3] And that could be easily shared across devices.

[4] This is my first project using JavaScript (don’t expect much when it comes to code quality).

[5] There are errors and most of the math here is almost nonsensical.

[6] For my use case, though, I may use face height rather than (or in addition to) face width, as I cannot trust that people will be turned towards my camera, and I figure it is less likely they will tilt their head.

[7] Though this may be somewhat limited, as a user has two eyes, not just one, so the depth illusion might not work perfectly.

[8] After all, we’re not working with holograms or special glasses.

[9] The animation would become distorted for users other than the individual the animation is tracking.

[10] But I wanted to at least post this first draft.

[11] Open Computer Vision.

[12] Or some Python animation library I might be able to find.

[13] Again, don’t expect much when it comes to code quality.

[14] Perhaps I will fix / improve this in the future.